Polarized imaging technique improves 3D scanning resolution by 1000 times

By Design Engineering staff

MIT researchers combine polarized-lens photography with depth camera data to boost captured resolution beyond that of professional-grade 3D scanners.

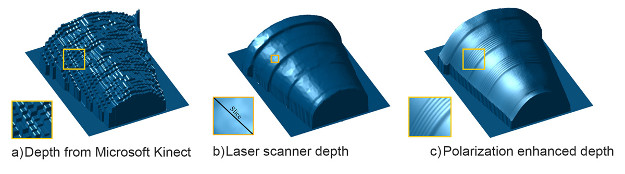

By combining the information from the Kinect depth frame in (a) with polarized photographs, MIT researchers reconstructed the 3-D surface shown in (c). Polarization cues can allow coarse depth sensors like Kinect to achieve laser scan quality (b). (Photo credit: MIT Media Lab)

“Today, they can miniaturize 3D cameras to fit on cell phones,” says Achuta Kadambi, an MIT graduate student in media arts and sciences and one of the system’s developers. “But they make compromises to the 3D sensing, leading to very coarse recovery of geometry. That’s a natural application for polarization, because you can still use a low-quality sensor, and adding a polarizing filter gives you something that’s better than many machine-shop laser scanners.”

The process begins with a depth map of an object captured by a 3D camera like that in Microsoft’s latest Kinect. While a rough approximation, the map provides a basic geometric structure of the object. In similar techniques, that data is combined with photometric or shading imagery to refine the model’s resolution.

The MIT team’s Polarized 3D, however, takes advantage of the way light’s polarization is affected when it bounces off an object. Light waves striking an object at an angle, for example, reflect a more limited range of polarization orientations than waves that strike the object directly. By analyzing the polarization of the reflected light, the researchers can estimate the object’s surface normals. This is accomplished by taking three 2D photos through a polarizing filter that is rotated to a different angle for each shot, then comparing the light intensities of the resulting images.
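The three-photo measurement can be illustrated with the textbook model of light passing through a linear polarizer, in which intensity varies sinusoidally with twice the filter angle. The sketch below is not the MIT team's code. It assumes filter angles of 0, 45 and 90 degrees (the article does not say which angles were used), and the function names are hypothetical. It recovers the polarization angle and the degree of polarization for a single pixel.

```python
import math

def polarizer_intensity(angle_deg, i_mean, dolp, aolp_deg):
    """Intensity behind an ideal linear polarizer set to angle_deg.

    Textbook model: I = i_mean * (1 + dolp * cos(2 * (angle - aolp))).
    """
    delta = math.radians(angle_deg - aolp_deg)
    return i_mean * (1.0 + dolp * math.cos(2.0 * delta))

def polarization_from_three(i0, i45, i90):
    """Recover (polarization angle in [0, 180) degrees, degree of polarization)
    from intensities at ASSUMED filter angles of 0, 45 and 90 degrees."""
    s0 = i0 + i90          # total intensity
    s1 = i0 - i90          # horizontal minus vertical component
    s2 = 2.0 * i45 - s0    # +45 minus -45 degree component
    aolp = (0.5 * math.degrees(math.atan2(s2, s1))) % 180.0
    dolp = math.hypot(s1, s2) / s0
    return aolp, dolp

# Simulate one pixel with made-up values, then recover them from three "photos".
samples = [polarizer_intensity(a, 100.0, 0.3, 30.0) for a in (0, 45, 90)]
aolp, dolp = polarization_from_three(*samples)
```

In shape-from-polarization methods generally, the recovered angle constrains the direction in which the surface tilts, and the degree of polarization constrains how steeply it tilts. Note that the angle is only defined within a 180-degree range.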

Although the nature of polarization has long been known and exploited, it traditionally hasn’t been used on its own for 3D imaging due to a number of limitations, the researchers say. For example, one orientation of a light wave (e.g., up/down, left/right) “looks” the same to a polarizing filter as its exact opposite, which leads to ambiguous surface normals. In addition, deriving shape from polarization alone suffers from refractive distortion, and any light bouncing off an object and toward the camera at a near-zero angle introduces noise into the captured data.

However, the researchers say that combining normals derived from polarization with the rough depth map overcomes most of these challenges. What’s more, the resulting model resolution is significantly higher than that of a 3D camera on its own. While the Kinect can resolve features down to approximately a centimeter, adding polarization data boosted the resolution to tens of micrometers. In addition, the Polarized 3D technique works equally well on highly reflective objects and in uncontrolled lighting.
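One simplified way to picture how a coarse depth map can resolve the ambiguity: polarization gives the direction of a surface normal only up to a 180-degree flip, so the candidate that lies closer to the direction implied by the rough depth data is kept. The following is a toy illustration under that assumption, not the algorithm from the paper, and `resolve_azimuth` and its inputs are hypothetical names.

```python
def angular_distance(a_deg, b_deg):
    """Smallest absolute difference between two angles, in degrees."""
    diff = (a_deg - b_deg) % 360.0
    return min(diff, 360.0 - diff)

def resolve_azimuth(polar_azimuth_deg, coarse_azimuth_deg):
    """Choose between the polarization-derived azimuth and its 180-degree flip.

    polar_azimuth_deg: ambiguous direction estimated from polarization.
    coarse_azimuth_deg: rough direction of the normal from the depth map
    (ASSUMED to be accurate to well within 90 degrees).
    """
    candidates = (
        polar_azimuth_deg % 360.0,
        (polar_azimuth_deg + 180.0) % 360.0,
    )
    return min(candidates, key=lambda c: angular_distance(c, coarse_azimuth_deg))

# The polarization estimate of 30 degrees could equally be 210 degrees;
# a coarse depth normal pointing near 200 degrees selects the flipped candidate.
chosen = resolve_azimuth(30.0, 200.0)
```

The coarse estimate only has to pick the right half of the circle. The fine detail still comes from the polarization measurement.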

Even so, the researchers say that combining the two kinds of image data to create accurate surface geometry is computationally intensive, though it can be carried out in real time using the graphics processors found on high-end video cards. In addition, using the technique in a cell phone camera or other hands-off application would require special grid-like polarization filters.

The MIT Media Lab team’s paper, to be presented at the International Conference on Computer Vision in December, also hints that the technique could help self-driving cars navigate in rain, snow or fog. While reliable under normal conditions, self-driving cars can become frazzled when water particles scatter light in unpredictable ways.

“Mitigating scattering in controlled scenes is a small step,” Kadambi says. “But that’s something that I think will be a cool open problem.”

www.media.mit.edu