Researchers turn anything into a touch screen

By Design Engineering Staff

Carnegie Mellon innovation combines depth cameras and projectors to create interactive interfaces on virtually any surface.

Research conducted at Carnegie Mellon University (CMU), funded in part by the Natural Sciences and Engineering Research Council of Canada (NSERC), has produced a depth camera and projector system that can turn virtually any surface into a touch-screen interface with nothing more than a gesture.

Using CMU’s WorldKit system, for example, a person could rub the arm of a sofa to “paint” a remote control for the TV, or swipe a hand across an office door to post a calendar from which later visitors can “pull down” an extended version. These ad hoc interfaces can also be moved, modified or deleted with similar gestures.
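
The article does not say how WorldKit turns a rubbing motion into an interface. As a rough illustration only, the Python sketch below assumes the system already reports touch points as (row, col) camera pixels, snaps them onto a coarse grid and takes the bounding box of the rubbed cells as the area for the new interface. The function `painted_region` and its `cell` and `min_cells` parameters are hypothetical and are not part of WorldKit.

```python
from typing import Iterable, Optional, Set, Tuple

Point = Tuple[int, int]  # (row, col) of one touch, in camera pixels (assumed input)


def painted_region(touches: Iterable[Point], cell: int = 8,
                   min_cells: int = 4) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) bounding the rubbed area, or None.

    Hypothetical helper: touches are quantised onto a grid of `cell`-pixel
    squares so that a rubbing motion fills in a patch rather than a thin line.
    """
    cells: Set[Point] = set()
    for r, c in touches:
        cells.add((r // cell, c // cell))  # snap each touch to its grid cell
    if len(cells) < min_cells:
        return None  # too small a gesture to count as a deliberate "paint" stroke
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    # Convert grid cells back to pixel coordinates (bottom/right are exclusive).
    return (min(rows) * cell, min(cols) * cell,
            (max(rows) + 1) * cell, (max(cols) + 1) * cell)
```

A larger `cell` makes the painted area more forgiving of a sloppy gesture, at the cost of a coarser outline.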

Researchers at Carnegie Mellon’s Human-Computer Interaction Institute (HCII) used a ceiling-mounted Microsoft Kinect and projector to record room geometries, sense hand gestures and project images on desired surfaces. But Robert Xiao, an HCII doctoral student, said WorldKit does not require such an elaborate installation.
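
A ceiling-mounted depth camera can, in principle, detect touches by comparing each new frame against a depth image of the empty room: a fingertip resting on a surface appears a few millimetres closer to the camera than the surface itself. The sketch below illustrates that general idea under stated assumptions; the 3 to 15 mm band, the use of 0 for a missing depth reading and the function names are all hypothetical, not details reported for WorldKit.

```python
from typing import List, Optional, Tuple

DepthMap = List[List[float]]  # depth in millimetres, row-major (assumed format)

# Hypothetical thresholds: how far in front of the bare surface a fingertip sits.
TOUCH_MIN_MM = 3.0
TOUCH_MAX_MM = 15.0


def touch_pixels(background: DepthMap, frame: DepthMap,
                 min_mm: float = TOUCH_MIN_MM,
                 max_mm: float = TOUCH_MAX_MM) -> List[Tuple[int, int]]:
    """Return (row, col) pixels where something sits just above the surface."""
    touched = []
    for r, (bg_row, cur_row) in enumerate(zip(background, frame)):
        for c, (bg, cur) in enumerate(zip(bg_row, cur_row)):
            if cur <= 0:  # assumed convention: 0 means no depth reading here
                continue
            height = bg - cur  # distance in front of the surface, toward the camera
            if min_mm <= height <= max_mm:
                touched.append((r, c))
    return touched


def touch_point(pixels: List[Tuple[int, int]]) -> Optional[Tuple[float, float]]:
    """Centroid of the touched pixels, or None if nothing is touching."""
    if not pixels:
        return None
    n = len(pixels)
    return (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)
```

The lower threshold rejects sensor noise on the bare surface, while the upper one rejects a hand hovering well above it.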

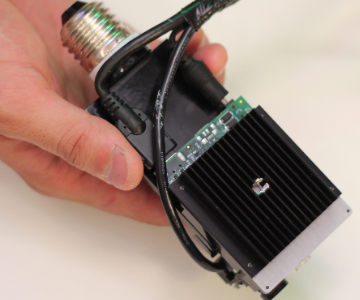

“Depth sensors are getting better and projectors just keep getting smaller,” he said. “We envision an interactive ‘light bulb’ — a miniaturized device that could be screwed into an ordinary light fixture and pointed or moved to wherever an interface is needed.”

The system does not require prior calibration, but instead automatically adjusts its sensing and image projection to the orientation of the chosen surface. Users can summon switches, message boards, indicator lights and a variety of other interface designs from a menu. Ultimately, the WorldKit team anticipates that users will be able to design custom interfaces with gestures.
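
Adjusting to a surface’s orientation requires some estimate of where that surface lies in space. One common approach, shown here as a sketch and not as WorldKit’s documented method, fits a plane to the 3D points the depth camera sees inside the chosen area; the plane’s normal then tells the projector how its image must be pre-distorted. The code uses an ordinary least-squares fit that solves along whichever axis gives the best-conditioned system, so it handles tabletops and walls alike. The function name `fit_plane` is hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def fit_plane(points: List[Vec3]) -> Tuple[Vec3, Vec3]:
    """Return (centroid, unit normal) of the least-squares plane through points."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    cz = sum(p[2] for p in points) / n
    # Sums of products of centred coordinates (an unnormalised covariance matrix).
    xx = xy = xz = yy = yz = zz = 0.0
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        xx += dx * dx
        xy += dx * dy
        xz += dx * dz
        yy += dy * dy
        yz += dy * dz
        zz += dz * dz
    # Express the plane as a function of the pair of axes whose 2x2 system
    # has the largest determinant, i.e. the best-conditioned choice.
    det_x = yy * zz - yz * yz  # solve x = f(y, z)
    det_y = xx * zz - xz * xz  # solve y = f(x, z)
    det_z = xx * yy - xy * xy  # solve z = f(x, y)
    best = max(det_x, det_y, det_z)
    if best <= 0.0:
        raise ValueError("points are collinear or coincident")
    if best == det_x:
        normal = (det_x, xz * yz - xy * zz, xy * yz - xz * yy)
    elif best == det_y:
        normal = (xz * yz - xy * zz, det_y, xy * xz - yz * xx)
    else:
        normal = (xy * yz - xz * yy, xy * xz - yz * xx, det_z)
    length = math.sqrt(sum(c * c for c in normal))
    unit = (normal[0] / length, normal[1] / length, normal[2] / length)
    return (cx, cy, cz), unit
```

The sign of the returned normal is arbitrary; a real system would flip it so that it faces the camera and projector.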

Xiao developed WorldKit with Scott Hudson, an HCII professor, and Chris Harrison, a Ph.D. student. They will present their findings April 30 at CHI 2013, the Conference on Human Factors in Computing Systems, in Paris.

Though WorldKit currently focuses on interaction with surfaces, future work may let users interact with the system in free space. Likewise, higher-resolution depth cameras may someday enable the system to sense detailed finger gestures.

“We’re only just getting to the point where we’re considering the larger questions,” Harrison said.

The CMU research was sponsored in part by a Qualcomm Innovation Fellowship, a Microsoft Ph.D. Fellowship and grants from the Heinz College Center for the Future of Work, the Natural Sciences and Engineering Research Council of Canada and the National Science Foundation.

www.cmu.edu