Computer scientists to develop ‘human-like’ vision systems

By DE staff

US/Canadian scientists to collaborate on $16 million smart vision project.

Drawing on research into how humans see, a coalition of US and Canadian engineering researchers has announced plans to create a cognitive vision system that mimics the neural processing of primates.

Headed by University of Southern California researcher Laurent Itti, the "neuromorphic visual system for intelligent unmanned sensors" project is a $16-million Defense Advanced Research Projects Agency effort to build machines that see the world the way his research has found humans see it.

Building on a previous effort called Neovision, the new project will try to build its eyes from the bottom up, finding out more precisely how these neural systems function.

"The modern battlefield requires that soldiers rapidly integrate information from a multitude of sensors," the project description reads. "A bottleneck with existing sensors is that they lack intelligence to parse and summarize the data. Our goal is to create intelligent general-purpose cognitive vision sensors inspired from the primate brain, to alleviate the limitations of such human-based analysis."

At issue is the fact that primate vision evolved to seek out specific visual signals critical to survival. This involves complex circuitry in the retina, where the outputs from light-detecting cells are processed to give rise to 12 different types of visual "images" of the world (as opposed to the red, green and blue images of a standard camera). These images are further processed by complex neural circuits in the visual cortex and in deep-brain areas including the superior colliculus, with feedback driving eye movements.

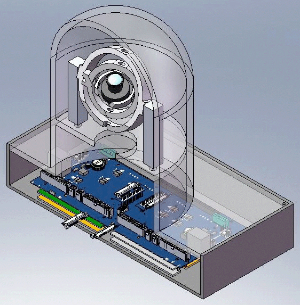

The research plan is to model the entire interactive system from the ground up in order to understand the exact messages transmitted from the retina to the cortex and on to the colliculus, and how brain cells interpret them. The researchers will then attempt to embed parallel transactions, using the same perception algorithms, into working silicon systems.